Suppose that the random variables X₁, X₂, …, Xₙ are independent and identically distributed (i.i.d.). Then the entropy of each random variable modeling the source is the same and can be denoted by H(X). From the point of view of classical information theory, the Shannon entropy has an important operational definition. It quantifies the minimal physical resources needed to store data from a classical information source and provides a limit to which data can be compressed reliably (i.e., in a manner in which the original data can be recovered later with a low probability of error). Shannon showed that the original data can be reliably obtained from the compressed version only if the rate of compression is greater than the Shannon entropy. This result is formulated in Shannon’s noiseless channel coding theorem (Shannon 1948, Cover and Thomas 1991, Nielsen and Chuang 2000) given later. In the next section, analogous quantities are introduced for quantum information and the corresponding properties are stated.
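As a rough illustration of this operational reading, the following Python sketch computes the Shannon entropy of a small source and the corresponding number of bits suggested by the compression limit for n i.i.d. symbols. The function name `shannon_entropy`, the four-letter example distribution, and the use of base-2 logarithms (entropy in bits) are assumptions made for this sketch; they do not come from the text.

```python
import math

def shannon_entropy(probs):
    """Shannon entropy, in bits, of a distribution given as a sequence of probabilities."""
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    # Zero-probability letters contribute nothing (convention: 0 log 0 = 0).
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Hypothetical four-letter source, chosen only for illustration.
source = [0.5, 0.25, 0.125, 0.125]
h = shannon_entropy(source)  # entropy per symbol, in bits

n = 1000                                  # number of i.i.d. symbols emitted
entropy_bits = n * h                      # bits suggested by the entropy limit
fixed_length_bits = n * math.log2(len(source))  # naive fixed-length encoding

print(h, entropy_bits, fixed_length_bits)
```

For this source the entropy limit is below the cost of a fixed-length encoding, which is the gap that reliable compression can exploit.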

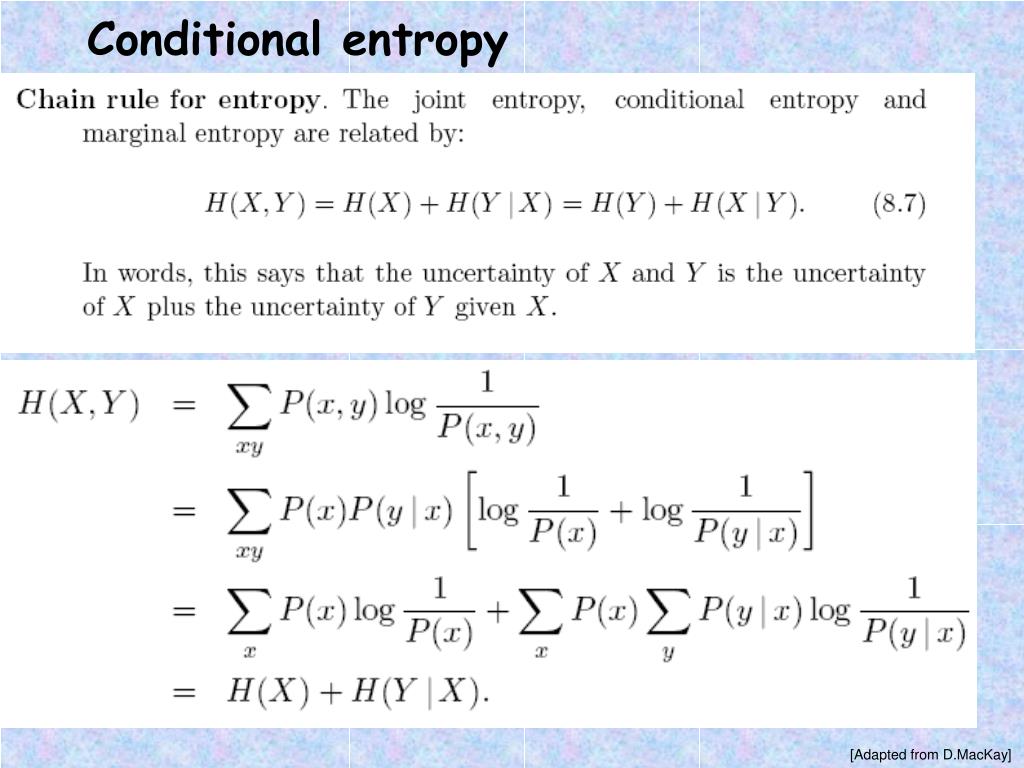

There are several other concepts of entropy, for example, relative entropy, conditional entropy, and mutual information. See, for example, Cover and Thomas (1991) and Nielsen and Chuang (2000). It is easy to see that (1) 0 ≤ H(X₁, …, Xₙ) ≤ n log |X|, where |X| denotes the number of letters in the alphabet X. Two other important properties are as follows: (2) H(X₁, …, Xₙ) is jointly concave in X₁, …, Xₙ and (3) H(X₁, …, Xₙ) ≤ H(X₁) + … + H(Xₙ). The latter property is called subadditivity.
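The bounds and subadditivity can be checked numerically on a small example. The sketch below assumes a hypothetical joint distribution of two correlated binary variables and takes subadditivity in its standard form, H(X₁, X₂) ≤ H(X₁) + H(X₂). The helper name `entropy` and the numbers in `joint` are illustrative choices, not taken from the text.

```python
import math

def entropy(probs):
    """Shannon entropy in bits; zero-probability outcomes are skipped."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Hypothetical joint distribution p(x1, x2) over a binary alphabet.
# Rows index x1, columns index x2; the two variables are correlated.
joint = [[0.4, 0.1],
         [0.1, 0.4]]

h_joint = entropy([p for row in joint for p in row])   # H(X1, X2)
h_x1 = entropy([sum(row) for row in joint])            # marginal entropy H(X1)
h_x2 = entropy([sum(col) for col in zip(*joint)])      # marginal entropy H(X2)

n, alphabet_size = 2, 2
upper = n * math.log2(alphabet_size)                   # n log |X|

bounds_hold = 0.0 <= h_joint <= upper                  # property (1)
subadditive = h_joint <= h_x1 + h_x2 + 1e-12           # subadditivity

print(h_joint, h_x1 + h_x2, bounds_hold, subadditive)
```

Because the two variables are correlated, the joint entropy is strictly smaller than the sum of the marginal entropies; for independent variables the two would coincide.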
